Disruption Doesn't Care About Your Model

From IBM 1979 to DeepSeek R1: The Real AI Story Isn't About Technology

Dear Friend –

I am acutely aware that we report predominantly on AI-related news these days. It surely is a sign of our times (and an indicator of where the heat, interest, and thus money are right now). Just as Steve Jobs once compared the computer to a “bicycle for the mind,” AI feels like (and in many cases already is) the fully souped-up turbo version of that bicycle. Personally, I have integrated AI firmly into a whole bunch of my workflows and already have a hard time imagining living without it.

How about you? AI yay, nay, or something in between for your personal use case?

And with that question in the air – here’s the latest…

Headlines from the Future

The Future Belongs to Idea Guys Who Can Just Do Things ↗

Geoffrey Huntley on the impact of AI on coding tasks and jobs (in his case).

I seriously can’t see a path forward where the majority of software engineers are doing artisanal hand-crafted commits by as soon as the end of 2026. If you are a software engineer and were considering taking a gap year/holiday this year it would be an incredibly bad decision/time to do it.

It’s a well-argued piece, worth your time even if you are not a developer (maybe even more so, unless your job relies solely on manual labor). Highly recommended to read and reflect upon.

This is highly likely to be true (and exciting – at least for some of us):

If you’re a high agency person, there’s never been a better time to be alive…

—//—

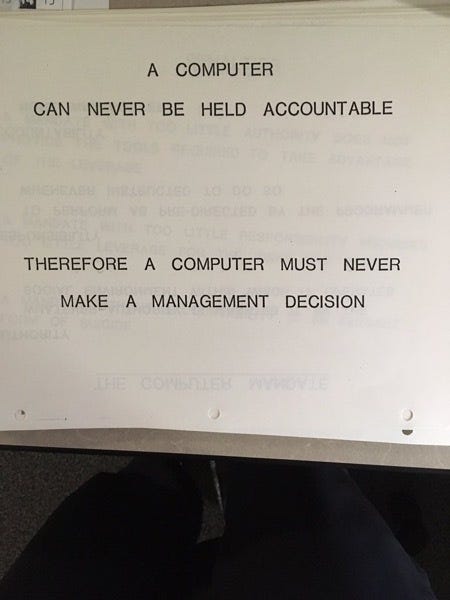

Good Reminder… ↗

From a 1979 presentation at IBM. Via Simon Willison.

—//—

The Barbarians at the Gate, or - The $6 DeepSeek R1 Competitor ↗

First, we had pretty much every AI company in the world arguing for the necessity of investing billions of dollars to train their models. Then we had DeepSeek R1, created by a Chinese hedge fund, claim that you can build a state-of-the-art model for a mere six million USD. And now we have a hotly discussed paper showing that you can achieve near-state-of-the-art performance for a mere $6 (yes, that’s six dollars) in training costs (using an open-source foundation model as its base).

A new paper released on Friday is making waves in the AI community, not because of the model it describes, but because it shows how close we are to some very large breakthroughs in AI. The model is just below state of the art, but it can run on my laptop. More important, it sheds light on how all this stuff works, and it’s not complicated.

The part of the sentence that reads “but because it shows how close we are to some very large breakthroughs in AI” is the important one.

Link to Tim Kellogg’s analysis.

—//—

AI Is Disruptive. And Disruption Is Different. ↗

Benedict Evans, writing in “Are better models better?”, makes a very important point, which, of course, traces all the way back to Clayton Christensen:

Part of the concept of ‘Disruption’ is that important new technologies tend to be bad at the things that matter to the previous generation of technology, but they do something else important instead. Asking if an LLM can do very specific and precise information retrieval might be like asking if an Apple II can match the uptime of a mainframe, or asking if you can build Photoshop inside Netscape. No, they can’t really do that, but that’s not the point and doesn’t mean they’re useless. They do something else, and that ‘something else’ matters more and pulls in all of the investment, innovation and company creation. Maybe, 20 years later, they can do the old thing too - maybe you can run a bank on PCs and build graphics software in a browser, eventually - but that’s not what matters at the beginning. They unlock something else.

What is that ‘something else’ for generative AI, though? How do you think conceptually about places where that error rate is a feature, not a bug?

What We Are Reading

👾 At $1 Billion, Has This Game Set Entertainment’s Spending Record? By letting fans directly fund an $800 million universe, Star Citizen is rewriting the rules of how massive entertainment projects are made. @Jane

💔 6 Lessons for Startups From a Museum Dedicated to Failure Not rocket science, but good reminders: The six forces of failure for startups include poor product-market fit, financial mismanagement, and good old bad timing. @Mafe

🚀 Is DeepSeek China’s Sputnik Moment? We may come to see the recent emergence of DeepSeek’s shockingly competitive AI model as a signal of a broader set of trends: Chinese innovation leadership across a range of key technologies and Western overconfidence in the same domains (see also: BYD). @Jeffrey

💡 33 Things We’d Love to Redesign in 2025 The question “What if...” can widen perspectives and spark some fantastic changes and innovations. For inspiration on when to ask that question and what answers you might come up with, see what some IDEO folks are asking themselves. @Julian

🤝 SoftBank in Talks to Invest as Much as $25 Billion in OpenAI If the deal goes through, SoftBank would become OpenAI’s largest investor, surpassing Microsoft, with some of the equity investment potentially used for OpenAI’s commitment to Stargate. @Pedro

🏰 AI and Startup Moats With the continued frenzy over better, cheaper AI models, it is a good moment to pause and consider AI’s implications for moats – and contrary to what the title suggests, it’s not just startups that will see their moats dry up. @Pascal

Some Fun Stuff

🪡 Drama in the US Quilter’s Association: Why I’m Boycotting the American Quilter’s Society

👂🏼 Ear muscle we thought humans didn’t use — except for wiggling our ears — actually activates when people listen hard

This end quote struck a chord for me: Ya know that old saying ideas are cheap and execution is everything? Well it's being flipped on its head by AI. Execution is now cheap. All that matters now is brand, distribution, ideas and retaining people who get it. The entire concept of time and delivery pace is different now.

- Geoffrey Huntley

> How do you think conceptually about places where that error rate is a feature, not a bug?

Oldest trope of the past year+ of AI: generative AI is *generative*. If you want it to filter and reproduce information retrieval, you're looking through the wrong end of the telescope.

Neural nets often prune nodes to improve generalized inference at the expense of specificity. We already have specificity down pretty well without needing AI for it.