LLMs are getting brain rot (seriously)

Plus: Nike’s robot shoes, AI’s reverse Dunning-Kruger effect, and why open source might not survive the AI boom

Dear Friend –

Jane and I escaped to the Hawaiian island of Kauai for a quick getaway this week. Being on (and grappling with) “island time” is a good reminder that the frantic pace and seemingly never-ending barrage of news (especially AI) is a man-made phenomenon and not necessarily a reflection of reality. There might be a reason why Zuck is building his compound here… 😉

But fret not – I’ll be back in busy mode soon enough.

P.S. In case you love geeking out on the use of AI in the accounting profession, the Journal of Accountancy recently interviewed me for their podcast. Check it out here.

And now, this…

Headlines from the Future

First, We Had the Bicycle for the Mind. Now We Have the E-bike for Your Feet! Our friends at NIKE just showcased their Project Amplify – a robotic brace that boosts your walking speed. Walk on!

↗ Nike says its first ‘powered footwear’ is like an e-bike for your feet

━━━━━

LLMs At Risk for Brain Rot. Expose an LLM to junk text in its training and the LLM will develop a condition akin to “brain rot.” A new paper tested this hypothesis and found that large language models are indeed susceptible to “non-trivial declines” in their capabilities when exposed to tainted training material. This will become an increasingly large problem, as all major LLMs have already been trained on whatever is available as text out there – and are now being trained on content which, in many cases, was created with the help of, or completely by, AI.

The decline includes worse reasoning, poorer long-context understanding, diminished ethical norms, and emergent socially undesirable personalities. […] These results call for a re-examination of current data collection from the Internet and continual pre-training practices. As LLMs scale and ingest ever-larger corpora of web data, careful curation and quality control will be essential to prevent cumulative harms.

━━━━━

Don’t Bite the Hand That Feeds You. LLMs are so good at coding because of the vast amount of training data available to them from open-source code repositories on sites like GitHub, as well as Q&A sites like Stack Overflow. Stack Overflow is essentially dead; open source might be next...

But O’Brien says, “When generative AI systems ingest thousands of FOSS projects and regurgitate fragments without any provenance, the cycle of reciprocity collapses. The generated snippet appears originless, stripped of its license, author, and context.” […] O’Brien sets the stage: “What makes this moment especially tragic is that the very infrastructure enabling generative AI was born from the commons it now consumes.”

↗ Why open source may not survive the rise of generative AI

━━━━━

The Use of AI Imagery for Humanitarian Causes. NGOs and non-profits increasingly rely on AI-generated images for their awareness and fundraising campaigns, which comes with a boatload of problems.

AI does not exist in a vacuum. To make humanitarian-style images, AI learns from the existing stock of humanitarian imagery and the biases embedded in it. That corpus of images and stereotypes was produced by photographers over decades; arguably centuries, if we trace the lineage back to colonial photography and artwork. This is especially the case for the white saviour trope.

A very real problem and indicative of the inherent bias in AI systems.

↗ AI visuals: A problem, a solution, or more of the same?

━━━━━

AI’s ‘Reverse Dunning-Kruger’ Effect: Users Mistrust Their Own Expertise.

A new study reveals that when interacting with AI tools like ChatGPT, everyone—regardless of skill level—overestimates their performance. Researchers found that the usual Dunning-Kruger Effect disappears, and instead, AI-literate users show even greater overconfidence in their abilities.

↗ When Using AI, Users Fall for the Dunning-Kruger Trap in Reverse

━━━━━

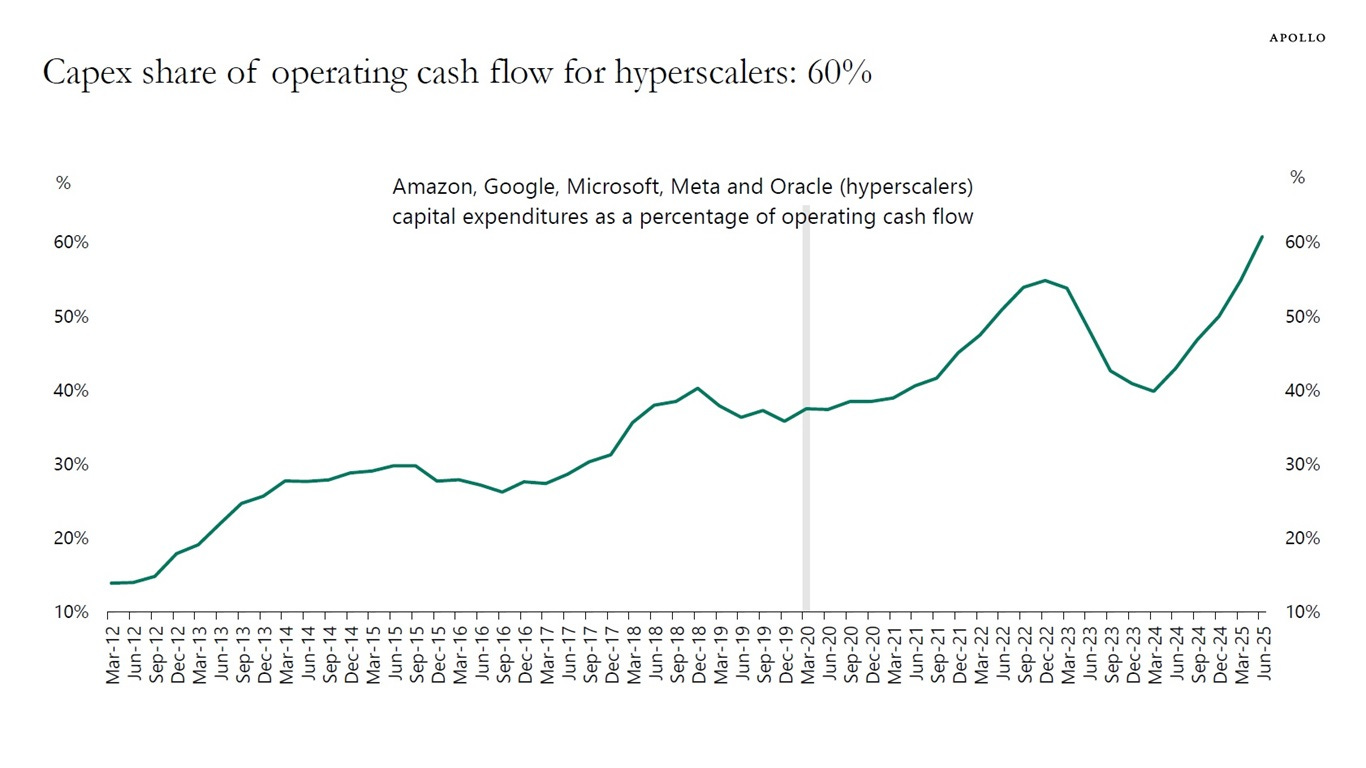

Chart of the Week. Hyperscalers’ (the Googles, Microsofts, OpenAIs of this world) CapEx spending on AI is getting crazy.

↗ Source

What We Are Reading

⚠️ Could the Internet Go Offline? Inside the Fragile System Holding the Modern World Together The internet runs on creaky code and a few data centers that one tornado, one bug, or one bad day could take down, and nobody knows if we could turn it back on. @Jane

🎥 Starring in Videos Is No Longer a Job Just for the Social Media Team Employees “are among the most-trusted sources” for consumers when evaluating a brand, and companies are leveraging this for awareness, although controlling the message can be tricky. @Mafe

📈 Disaster Recovery Is Big Business While we all debate the potential economic impact of AI, the economic implications of another (clearer) megatrend continue to build. @Jeffrey

⚡ Inside the Relentless Race for AI Capacity A vivid 30-year timeline charts how the boom in data centers has escalated from niche infrastructure to super-hubs, and highlights how meeting that demand is now testing the limits of sustainable power supplies. @Kacee

🧠 The Brain Navigates New Spaces by ‘Flickering’ Between Reality and Old Mental Maps You might have heard me reference neurological studies which show that the hippocampus is responsible for both remembering things and your ability to imagine the future. When it comes to your brain, there is no future without referencing the past. Here is another take on this insight. @Pascal

Down the Rabbit Hole

💀 Britain’s most tattooed man says UK’s age check system told him to “remove his face”

🎮 What goes up, must come down: Counter-Strike’s player economy is in a multi-billion dollar freefall

🤔 I can’t even comment on this: Sam Altman Says If Jobs Gets Wiped Out, Maybe They Weren’t Even “Real Work” to Start With

👓 With a tiny eye implant and special glasses, some legally blind patients can read again

🧳 This is just sad: If you can’t afford a vacation, an AI app will sell you pictures of one

🖼️ Interesting: GenAI Image Showdown.

🏄🏼 AFP developing AI tool to decode Gen Z slang amid warning about ‘crimefluencers’ hunting girls

♠️ Another day, another AI battle. This one pits LLMs against each other in a game of poker.

👽 Where has all the weirdness gone? The Decline of Deviance

🙀 Talk about “unintended consequences”: Beloved Bodega Cat Allegedly Killed by Waymo in Mission District

Pascal is realizing that, for him, paper books beat Kindle eBooks any day (and not only on the beach).