The AI Peloton Effect: When Everyone’s Racing But No One’s Winning

Frontier models converge on identical capabilities while hype cycles crash into reality – plus the week’s most revealing AI contradictions

Dear Friend,

The other day I sat down with my former boss, Scot Wingo, founder and ex-CEO of ChannelAdvisor and truly one of the sharpest minds in e-commerce. We talked about all things disruption, the future, how to navigate the messy liminal space we find ourselves in today, and his latest venture, ReFiBuy.ai. It’s a fascinating conversation – give it a listen.

And while you are at it, our very own Jeffrey Rogers was recently in conversation with Lisa Kay Solomon (one of our dearest colleagues) on the RedThread Research podcast, talking about “Why Your Strategic Plan Might Be Trapping You.”

And now, this…

Headlines from the Future

Artificial Analysis State of AI ↗

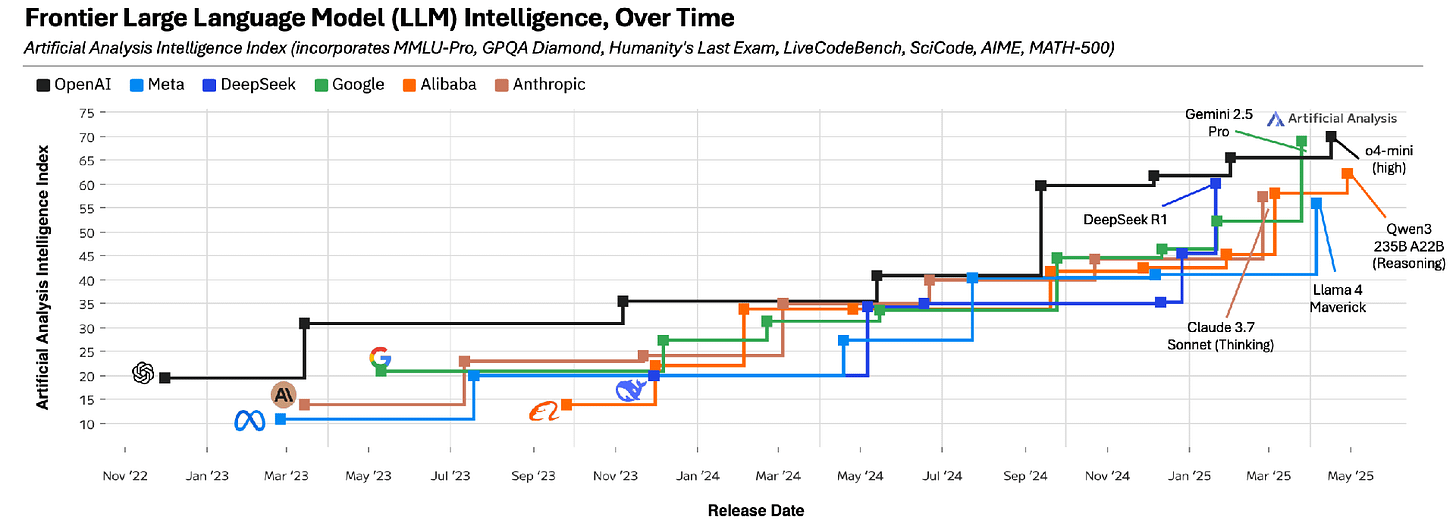

A recent report from Artificial Analysis (Q1 2025) delves into the capabilities and emerging trends of frontier AI models. The report identifies six key themes:

Continued AI progress across all major labs, the widespread adoption of reasoning models that “think” before answering, increased efficiency through Mixture of Experts architectures, the rise of Chinese AI labs rivaling US capabilities, the growth of autonomous AI agents, and advances in multimodal AI across image, video, and speech.

There is a lot to unpack in the report, but the key chart might be this:

All frontier models are converging on the same set of capabilities, at roughly the same level of quality. That means we will see (and are already seeing) a brutal race among the labs to hold their position in the peloton, with ever-increasing price pressure. This raises the question of how these companies can justify their insane valuations…

—//—

The Wild Dichotomy of AI in Research ↗

Just this past week, all the frontier labs announced new, sophisticated AI research tools, claiming exceptional results. Headlines such as “U. researchers unveil AI-powered tool for disease prediction with ‘unprecedented accuracy’” or “Microsoft’s new AI platform to revolutionize scientific research” gush about these tools’ abilities.

Meanwhile, Nick McGreivy, a physics and machine learning PhD, shared his own experience using AI for scientific discovery – and his story reads very differently:

“I’ve come to believe that AI has generally been less successful and revolutionary in science than it appears to be.”

He elaborates:

“When I compared these AI methods on equal footing to state-of-the-art numerical methods, whatever narrowly defined advantage AI had usually disappeared. […] 60 out of the 76 papers (79 percent) that claimed to outperform a standard numerical method had used a weak baseline. […] Papers with large speedups all compared to weak baselines, suggesting that the more impressive the result, the more likely the paper had made an unfair comparison.”

And in summary:

“I expect AI to be much more a normal tool of incremental, uneven scientific progress than a revolutionary one.”

And the discussion about what is hype and what is reality in AI continues…

—//—

Neal Stephenson on AI: Augmentation, Amputation, and the Risk of Eloi ↗

Science fiction author Neal Stephenson, who coined the term “metaverse” in his seminal novel Snow Crash (1992), recently spoke at a conference in New Zealand on the promise and peril of AI.

His (brief but razor-sharp) remarks are well worth reading in full, but this quote stood out:

“Speaking of the effects of technology on individuals and society as a whole, Marshall McLuhan wrote that every augmentation is also an amputation. […] This is the main thing I worry about currently as far as AI is concerned. I follow conversations among professional educators who all report the same phenomenon, which is that their students use ChatGPT for everything, and in consequence learn nothing. We may end up with at least one generation of people who are like the Eloi in H.G. Wells’s The Time Machine, in that they are mental weaklings utterly dependent on technologies that they don’t understand and that they could never rebuild from scratch were they to break down.”

—//—

MIT Backs Away From Paper Claiming Scientists Make More Discoveries with AI ↗

Remember that MIT paper claiming that researchers using AI are significantly more productive (as in: they make more discoveries) yet less satisfied with their work (because they are relegated to drudgery while the AI does the hard, challenging, and exciting work)?

Well, it turns out that this was another case of “too good to be true.” MIT has disavowed the paper and asked for it to be withdrawn, the author is no longer affiliated with MIT, and MIT states it “has no confidence in the provenance, reliability, or validity of the data and has no confidence in the veracity of the research contained in the paper.”

Ouch.

—//—

Learn Prompt Engineering From The Pros ↗

Want to get better at writing prompts? It’s a worthwhile investment of time: the difference in results between a mediocre prompt and a good one can be vast. Here are a few excellent sources, straight from the horse’s mouth:

OpenAI has a whole site dedicated to its “OpenAI Cookbook,” which includes, for example, a GPT-4.1 Prompting Guide.

Google has an excellent resource on prompt design strategies on its “AI for Developers” site.

And Anthropic (Claude) has something similar on its own developer documentation site – which even includes a prompt generator tool.

Happy prompt engineering!

What We Are Reading

🤖 AI Can Spontaneously Develop Human-Like Communication – AI language models are capable of forming social communities and adopting shared norms, new research reveals. @Jane

🛒 Walmart Is Preparing to Welcome Its Next Customer: The AI Shopping Agent – Anticipating the AI-driven future of e-commerce, retailers are rushing to adapt their infrastructure to a post-search, post-SEO world of marketing and discovery. @Jeffrey

👶 Source Code: My Beginnings by Bill Gates – Focused on his early life rather than business triumphs, Gates’s memoir emphasizes the human side of innovation and the foundational experiences that shaped him. @Kacee

🚄 A High-Speed Future for Europe – This case study is a great analysis of, and inspiration for, a European rail project. It beautifully connects the success factors of a holistic, Europe-wide rail system with European identity, giving it real cultural relevance. @Julian

🔊 Compressed Music Might Be Harmful to the Ears – As someone who listens to a lot of music through headphones, this raises some alarm bells. Also, four hours of Adele at 102 dB sounds like torture. Poor guinea pigs. @Pascal

Rabbit Hole Recommendations

We did the math on AI’s energy footprint. Here’s the story you haven’t heard.

Man shows up to job interview and finds out he’s being interviewed by AI

Elton John is furious about plans to let Big Tech train AI on artists’ work for free

China’s humanoid robots will not replace human workers, Beijing official says

Almost half of young people would prefer a world without internet, UK study finds

Happy Distractions

💾 Feel like your computer is getting slower despite it being “faster”? You are not alone: fast machines, slow machines.

⚡ Maybe don’t chug quite as many Red Bulls: taurine (one of the active ingredients in energy drinks) has been linked to the progression of blood cancer.

🍗 The hottest chicken wings in Great Britain LIVE on ITV (“Maybe this was a mistake…”)

The AI Peloton effect on the producer side nicely mirrors the Red Queen effect (aka the Red Queen hypothesis) forming on the AI consumer side. Simon Wardley posted a nice review of the latter this week on LinkedIn:

https://www.linkedin.com/posts/simonwardley_x-why-is-eric-schmidt-wrong-on-software-activity-7329914376973393922-J_u1/

Good stories of how AI is boosting scientific productivity... or maybe less so. Nearly four years ago, Holden Karnofsky evangelized the idea of PASTA ("Process for Automating Scientific and Technological Advancement"), where AGI scientists would exponentially send scientific discovery into Moore's Law mayhem.

Many AI purveyors (I'm looking at you, Sama) and wannabe AI thought leaders (most recently, Eric Schmidt in his TED talk) have copypasta'd this idea as fact. I could never get over the false equivalence in its initial assumptions, as if binary bits on silicon extrapolating on a curve could embody an army of science-friendly unit shifters.