AI Breaks The Goldilocks Zone

Why AI not only feels like cheating, but IS cheating—and why that is a problem.

In preparation for an upcoming webcast on future trends with the amazing Kacee Johnson at CPA.com, I uploaded the IRS’s proposed set of new tax rules, bearing the exciting title “Certain Partnership Related-Party Basis Adjustment Transactions as Transactions of Interest,” to Google’s NotebookLM (the same AI I asked to create a synthetic podcast of my book a few weeks ago). NotebookLM duly created a podcast (featuring the same overly enthusiastic male and female hosts as all its podcasts), a study guide, and a bunch of other artifacts, and it let me ask follow-up questions about the document.

The podcast has gems like:

“And let me tell you, the IRS has, they’ve really cooked up something that has the accounting world buzzing.”

[…]

“They’re requiring more disclosure, which is never fun, and it could lead to a lot more scrutiny down the line.

More paperwork and a higher chance of getting audited.

You know how much accountants love that.”

[…]

“Until next time, keep those pencils sharpened and stay one step ahead of the IRS.”

All in all, it is rather remarkable. I know literally nothing about “partnership taxation” (I am most definitely not a CPA), and yet I have gained a pretty good (and rather engaging) understanding of what the proposed rule is about, how it’s supposed to work, and why it matters. Now, I have no way to check whether any of what NotebookLM “said” is actually true or simply hallucinated (for that, I would need domain expertise), but at least on the surface, it all makes sense.

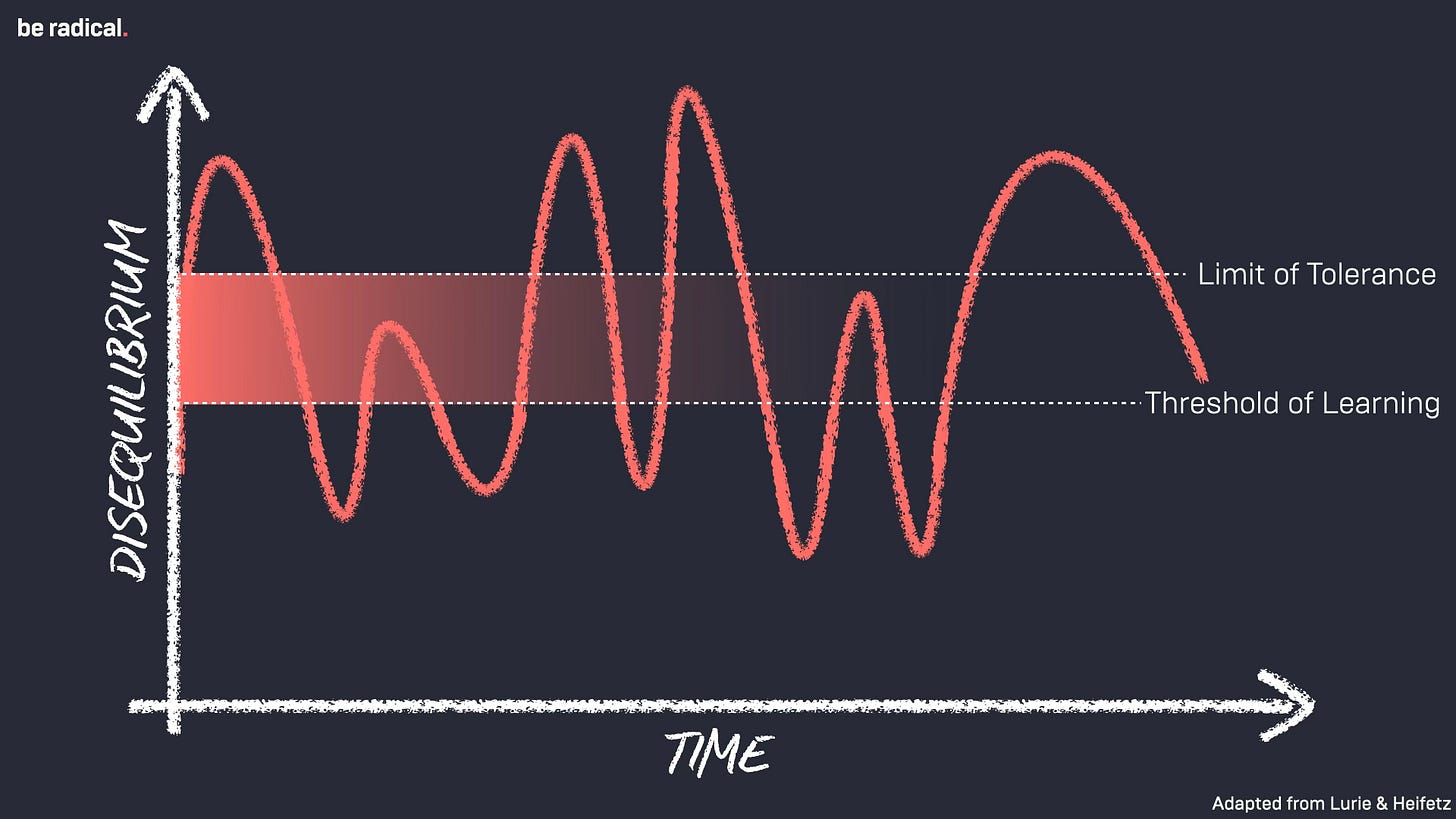

And herein lies the problem. Setting aside that I have no clue whether NotebookLM tells the truth, the experience is a good reminder of the delicate balance we must strike when we want to learn something new. This balance is often referred to as the Goldilocks Zone of Learning (I learned the term from Jeffrey): a space where the complexity of the material is just right, neither so easy that we risk becoming complacent nor so hard that we become overwhelmed and disengaged.

In an age where AI tools like NotebookLM can simplify complex topics into digestible bites, there’s a real danger that we never engage deeply enough with the source material itself. While these tools can provide us with a surface-level understanding, they often do so at the expense of true comprehension. When learning becomes too easy, we miss out on the cognitive struggle that is essential for deep learning (pun intended).

When faced with challenging material, our brains are forced to work harder. We grapple with concepts, make connections, and ultimately internalize knowledge in a way that is meaningful. This process is crucial for long-term retention and application. However, when AI takes the reins and oversimplifies content, it robs us of that struggle. The result? We skim the surface without ever diving deep enough to truly understand or remember.

Moreover, relying on AI-generated content can create a false sense of security. We might feel informed, but without rigorous engagement and critical thinking, we risk accepting information at face value. This is particularly concerning in fields like tax law, where nuances matter immensely and misinterpretations can lead to significant consequences.

To navigate this Goldilocks Zone effectively, we need to be intentional about our learning strategies and embrace the struggle that comes with them. Mastery, after all, is a hard-won process, not a cool AI-generated podcast.
